Getting Started

I joined the team shortly after its initial launch. At the time, the team was trying to figure out product-market fit, how people were utilising the platform and how to get them onboarded.

Different use cases for the enterprise metaverse

To learn more, I conducted 12 interviews with existing users and ran virtual field studies with 6 groups of users entering the world for the first time. The findings were as follows:

9 / 12 - Wanted to use their world to promote and sell some kind of product or service

5 / 12 - Had problems controlling their avatar

4 / 12 - Had issues connecting to the audio channel

4 / 12 - Had problems navigating the world

2 / 12 - Had connection issues

It seemed that the majority of our users were utilising the metaverse for commercial use cases.

The UI at the time had many issues: people struggled to connect to the audio channel, mute themselves or even navigate within the virtual environment.

We wanted to create an interface that resembled familiar meeting tools such as Google Hangouts and Zoom. This would lower the barrier to entry and make the transition to 3D environments easier.

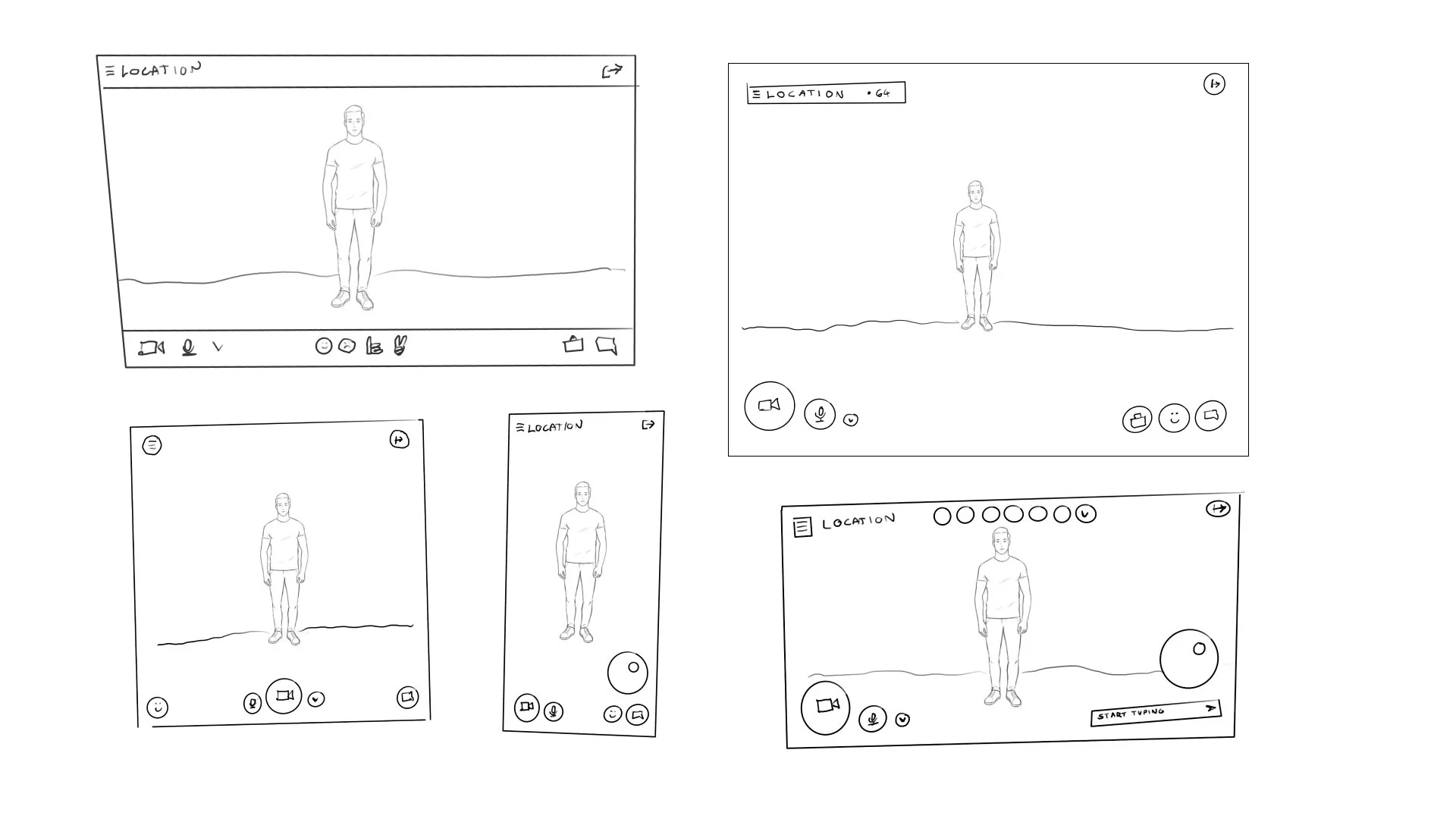

Sketches from the design sprint diverge stage on different layouts

To come up with the new UI, I organised a three-day design sprint involving the development and executive teams. The goal was to understand what our users wanted and which structural issues were getting in their way. In the end, we produced two options for further validation.

Option A used a transparent bottom bar and portrait orientation on mobile.

Option B was what we called a ‘bubble style’ layout with horizontal orientation on mobile.

To decide which option was more viable, we interviewed 6 users and had them test prototypes: 3 on Option A and 3 on Option B. Users found that the transparent bar obscured their view, and they preferred the horizontal layout, so we decided to continue with the ‘bubble style’ version.

Onboarding

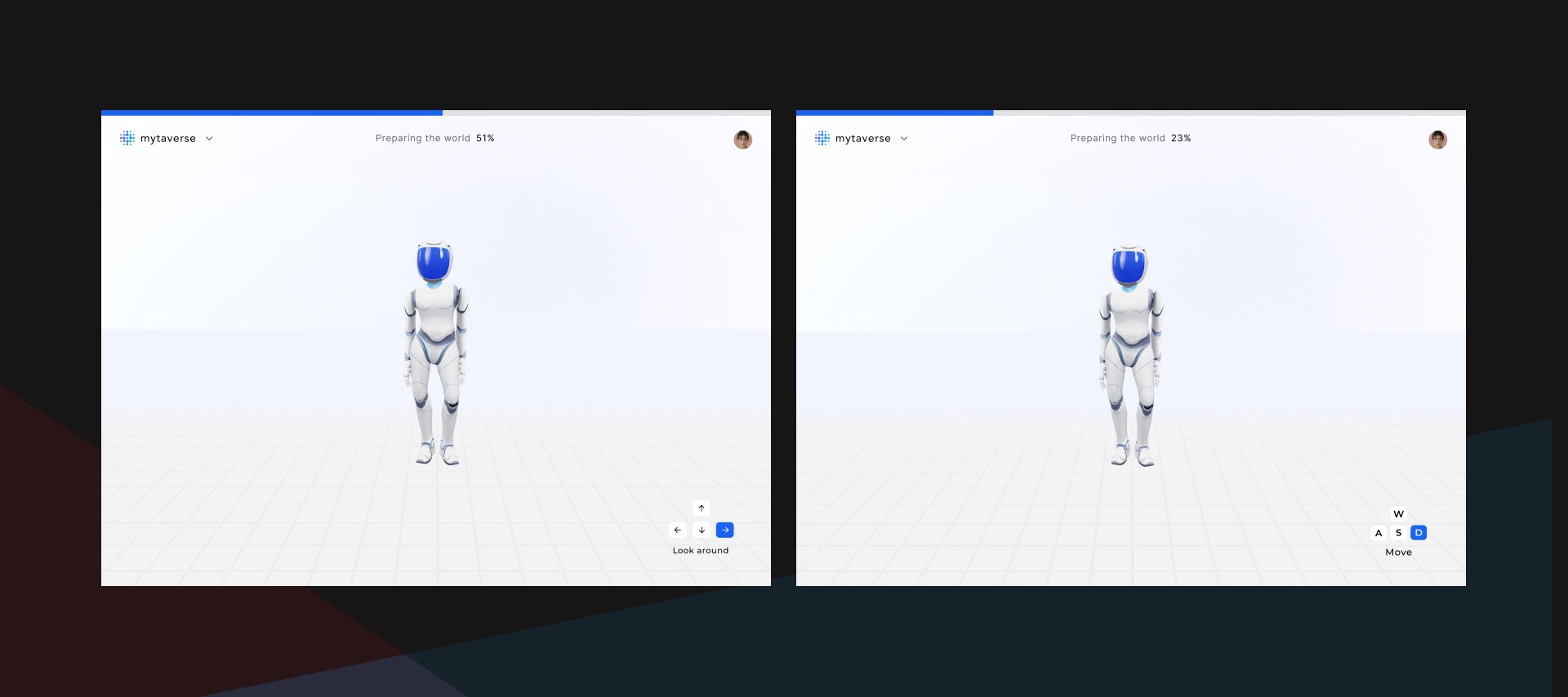

Many users were unfamiliar with video game controls, and we ran several initiatives to address this. One of the first was a virtual lobby where users could try out the controls on their own while waiting for the game to load. The problem was that many users mistook the lobby for the actual game, and as we gradually reduced loading times, it made sense to design something more lightweight.

The lobby area with a working avatar to play with

This is how we ended up with a more traditional loading screen that allowed people to familiarise themselves with the controls while clicking through helpful tips and hints.

Useful tips & hints while the game loads

Another initiative was a helper bot that users could summon at any time by voice or text. The bot, ‘S.A.R.A’ (Sentient Autonomous Robot Aid), provided instructions drawn from the knowledge base and spoke its answers using a machine-learning-generated voice.

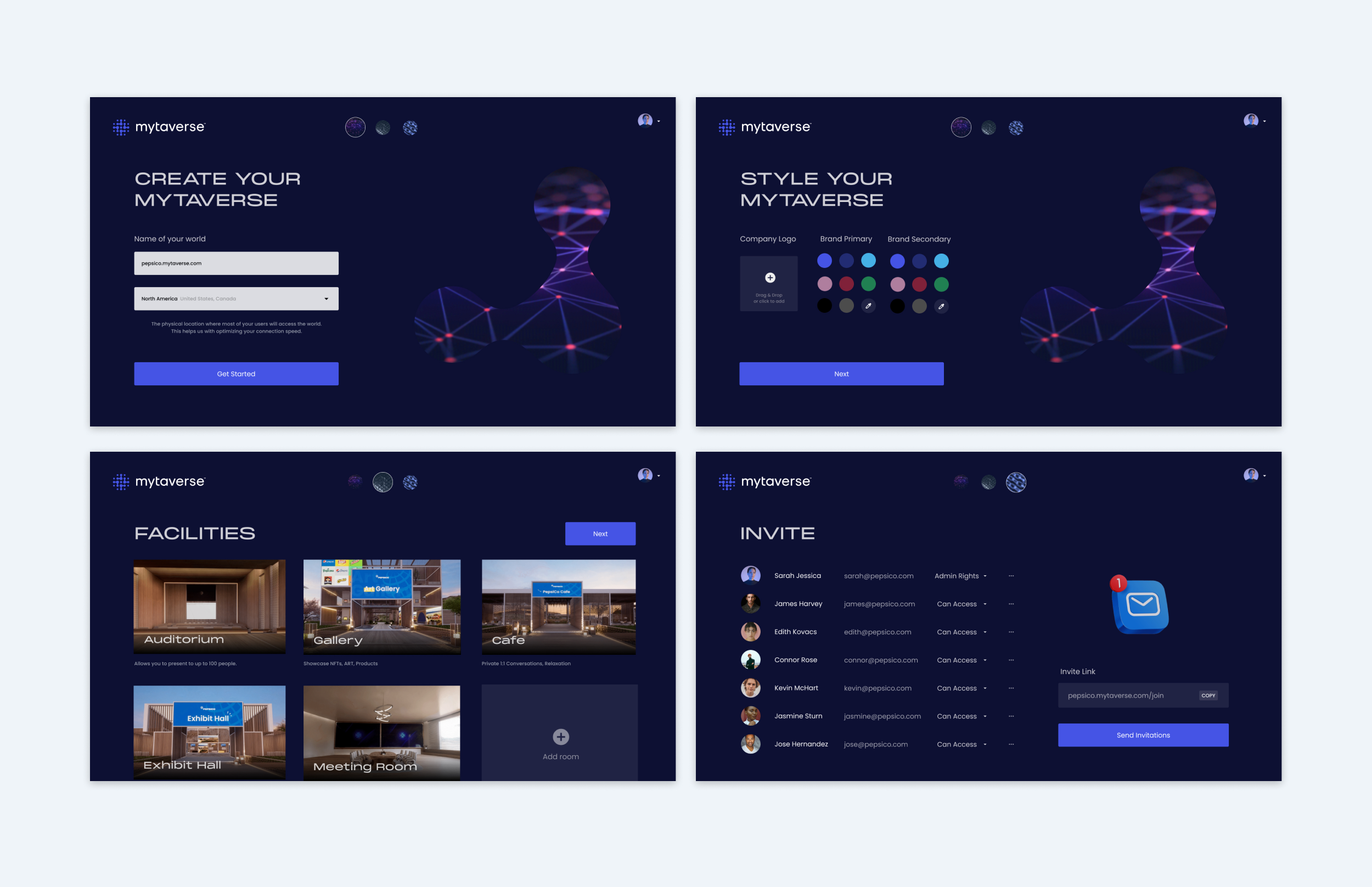

Custom Worlds

Most of our clients wanted a world they could call their own. This meant custom avatar skins, brand colours and assets across both the interface and the 3D environments. We also split-tested variations of the login interface to find out which designs and login options clients preferred.

A/B Split Test Variations

Branded login for Dassault Aviation

Branded and Non-branded variation for the avatar selection screen

Video Conferencing

Adding video conferencing was a major milestone for the project. We wanted to design something that preserved the feeling of being in a three-dimensional environment. The idea was to arrange participants' videos according to their direction and distance relative to the user's avatar. As the user turned the view or moved around, the videos would rotate accordingly, much like a compass.

Illustration on how the video mechanism works

Rotational video in action
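The compass-like video behaviour described above can be illustrated with a short sketch. This is not the platform's actual implementation. It assumes a flat floor plan where +x is to the avatar's right when it faces +z, a heading in degrees measured clockwise, and hypothetical names (`Participant`, `VideoTile`, `layout_tiles`) and tuning constants (`MAX_DISTANCE`, `MIN_SCALE`). Each participant's bearing relative to the avatar's heading decides where their video sits from left to right, and their distance decides how large it is drawn.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning constants, chosen only for illustration.
MAX_DISTANCE = 20.0  # distance at which a tile shrinks to MIN_SCALE
MIN_SCALE = 0.3      # smallest size a video tile is drawn at


@dataclass
class Participant:
    name: str
    x: float
    z: float


@dataclass
class VideoTile:
    name: str
    bearing_deg: float  # relative to the avatar's heading; negative = left
    distance: float
    scale: float        # 1.0 = full size, shrinks linearly with distance


def relative_bearing(user_x: float, user_z: float, user_yaw_deg: float,
                     px: float, pz: float) -> float:
    """Angle of a participant relative to where the avatar faces, in [-180, 180)."""
    # Absolute bearing measured clockwise from +z, like a compass from north
    # (assumes +x is to the right when facing +z).
    absolute = math.degrees(math.atan2(px - user_x, pz - user_z))
    # Subtract the avatar's heading and wrap the result into [-180, 180).
    return (absolute - user_yaw_deg + 180.0) % 360.0 - 180.0


def layout_tiles(user_x: float, user_z: float, user_yaw_deg: float,
                 participants: list[Participant]) -> list[VideoTile]:
    """Compute an ordered strip of video tiles for the current avatar pose."""
    tiles = []
    for p in participants:
        distance = math.hypot(p.x - user_x, p.z - user_z)
        bearing = relative_bearing(user_x, user_z, user_yaw_deg, p.x, p.z)
        # Closer participants get larger tiles, clamped to MIN_SCALE.
        scale = max(MIN_SCALE, 1.0 - distance / MAX_DISTANCE)
        tiles.append(VideoTile(p.name, bearing, distance, scale))
    # Order tiles left to right across the screen, like a compass strip.
    tiles.sort(key=lambda t: t.bearing_deg)
    return tiles
```

Re-running `layout_tiles` whenever the avatar turns or moves produces the rotation effect: turning the avatar 90° to the right shifts every participant's bearing 90° to the left along the strip.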